Eelco Visser

The most shocking event of this year is the unfortunate passing of my former PhD supervisor, Eelco Visser. I still find it hard to believe that he is gone.

Although I left the university quite some time ago, the things I learned while I was employed there (such as having all these nice technical discussions with him) still have a profound impact on me today. Moreover, without his suggestion this blog would probably not exist.

Because the original purpose of my blog was to augment my research with extra details and practical information, I wrote a blog post with some personal anecdotes about him.

COVID-19 pandemic

In my previous blog reflection, I explained that we were in the third wave of the COVID-19 pandemic, caused by the even more contagious Omicron variant of the virus. Fortunately, it turned out that, despite being more contagious, this variant is less severe than the previous Delta variant.

Several weeks later, the situation got under control and things were opened up again. The situation remained pretty stable afterwards. This year, it was possible for me to travel again and to go to physical concerts, which feels a bit weird after staying home for two whole years.

The COVID-19 virus is not gone, but the situation is under control in Western Europe and the United States. There have not been any lockdowns or serious capacity problems in the hospitals.

When the COVID-19 pandemic started, my employer, Mendix, adopted a work-from-home-first culture. By default, people work from home and if they need to go to the office (for example, to collaborate) they need to make a desk reservation.

As of today, I am still working from home most of the time. I typically visit the office only once a week, and I use that time to collaborate with people. On the remaining days, I focus on development work as much as possible.

I have to admit that I like the quietness at home -- not everything can be done at home, but for programming tasks I need to think, and for thinking I need silence. Before the COVID-19 pandemic started, the office was typically very noisy, which sometimes made it difficult for me to focus.

Learning modern JavaScript features

I used to work intensively with JavaScript at my previous employer, Conference Compass, but since I joined Mendix I have mostly been using other technologies. During my CC days, I was still mostly writing old-fashioned (ES5) JavaScript code, and I wanted to familiarise myself with modern ES6 features.

One of the challenging aspects of using JavaScript is asynchronous programming -- making sure that the main thread of your JavaScript application never blocks for too long (so that it can handle multiple connections or input events) while keeping your code structured.

With old-fashioned ES5 JavaScript code, I had to rely on software abstractions to keep my code structured, but with the addition of promises (compatible with Promises/A+) in ES6 and async/await in ES2017 to the core of the JavaScript language, this can be done in a much cleaner way without using any custom software abstractions.
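As a minimal sketch of that difference (the function names below are hypothetical and are not taken from the blog posts or the framework), a callback-based operation can be wrapped in a promise once, after which sequential asynchronous steps read almost like synchronous code:

```javascript
// Hypothetical asynchronous operation in the old callback style (ES5).
// It reports its result later through callback(err, result).
function fetchGreetingWithCallback(name, callback) {
    setTimeout(function () {
        if (typeof name !== "string" || name === "") {
            callback(new Error("name must be a non-empty string"));
        } else {
            callback(null, "Hello " + name);
        }
    }, 0);
}

// The same operation wrapped in a promise, so that it can be awaited.
function fetchGreeting(name) {
    return new Promise((resolve, reject) => {
        fetchGreetingWithCallback(name, (err, result) => {
            if (err) {
                reject(err);
            } else {
                resolve(result);
            }
        });
    });
}

// With async/await, a loop over asynchronous steps needs no custom
// abstraction: each iteration waits for the previous one without
// blocking the main thread.
async function greetAll(names) {
    const greetings = [];
    for (const name of names) {
        greetings.push(await fetchGreeting(name));
    }
    return greetings;
}

greetAll(["Alice", "Bob"])
    .then((greetings) => console.log(greetings))
    .catch((err) => console.error(err.message));
```

In ES5, the loop in `greetAll` would typically require a helper function from a library or a hand-written recursive callback chain.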

In 2014, I wrote a blog post about the problematic synchronous programming concepts in JavaScript and their equivalent asynchronous function abstractions. This year, I wrote a follow-up blog post about the ES6 concepts that I should use (rather than software abstractions).

To motivate myself to learn about ES6 concepts, I needed a practical use case -- I ported the layout component of my web framework (for which a Java and PHP version already exist) to JavaScript using modern language features, such as async/await, classes and modules.

An interesting property of the JavaScript version is that it can be used both on the server-side (as a Node.js application) and client-side (directly in the browser by dynamically updating the DOM). The Java and PHP versions only work server-side.
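The following is only a rough sketch of the general idea, not the actual API of the layout framework: the `renderSections` function and the shape of the `sections` array are assumptions made for illustration. The same rendering function produces markup in both environments, and only the last step differs:

```javascript
// Hypothetical, heavily simplified layout model: every section becomes
// a div. The content is assumed to be trusted HTML; no escaping is done.
function renderSections(sections) {
    return sections
        .map((section) => `<div id="${section.id}">${section.content}</div>`)
        .join("\n");
}

const sections = [
    { id: "header", content: "My web site" },
    { id: "contents", content: "Welcome!" }
];

const html = renderSections(sections);

if (typeof document !== "undefined") {
    // Client-side: update the DOM of the page that is already loaded.
    document.body.innerHTML = html;
} else {
    // Server-side (for example in Node.js): emit the markup, which a
    // real application would send as part of an HTTP response.
    console.log(html);
}
```

Checking for the existence of `document` is one simple way to tell the two environments apart; a real implementation would probably separate the server and browser entry points into different modules.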

Fun projects

In earlier blog reflections, I decided to also spend more time on useless fun projects.

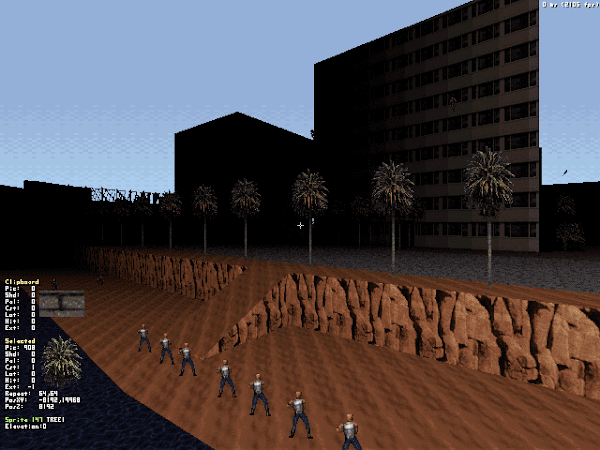

In the summer of 2021, when I decided not to do any traveling, I had lots of time left to tinker with all kinds of weird things. One of my hobby projects was to play around with my custom maps for Duke3D and Shadow Warrior that I created while I was still a teenager.

While playing with these maps, I noticed a number of interesting commonalities and differences between Duke3D and Shadow Warrior.

Although both games use the same game engine, the BUILD engine, their game mechanics are completely different. As an exercise, I ported one of my Duke3D maps to Shadow Warrior and wrote a blog post about the process, including a description of some of their different game mechanics.

Although I had already done the majority of the work back in 2021, I found some free time in 2022 to finally finish the project.

Web framework improvements

This year, I also worked intensively on improving several aspects of my own web framework. My custom web framework is an old project that I started in 2004 and many parts of it have been rewritten several times.

I am not actively working on it anymore, but once in a while I still do some development work, because it is still in use by a couple of web sites, including the web site of my musical society.

One of my goals is to improve the user experience of the musical society web site on mobile devices, such as phones and tablets. This particular area had been problematic for years. Despite promising all kinds of people that I would fix this, it took me several years to actually take that step. :-)

To improve the user experience for mobile devices, I wanted to convert the layout to a flexbox layout, for which I needed to extend my layout framework because it does not generate nested divs.

I have managed to extend my layout framework to support flexbox layouts. In addition, I have made many other improvements. I wrote a blog post with a summary of all my feature changes.

Nix-related work

In 2022, I also did Nix-related work, but I have not written any Nix-related blog posts this year. Moreover, 2022 was also the first time since the end of the pandemic that a physical NixCon was held -- unfortunately, I decided not to attend it.

The fact that I did not write any Nix-related blog posts is quite exceptional. Since 2010, the majority of my blog posts have been Nix-related or about software deployment challenges in general. Until now, there had never been an entire year without any Nix-related blog posts. I think I need to explain a thing or two about what has happened.

This year, it was very difficult for me to find the energy to undertake any major Nix developments. Several things have contributed to that, but the biggest take-away is that I have to find the right balance.

The reason why I got so extremely out of balance is that I do most of my Nix-related work in my spare time. Moreover, my primary motivation to do Nix-related work is idealistic -- I still genuinely believe that we can automate the deployment of complex systems in a much better way than the conventional tools that people currently use.

Some of the work for Nix and NixOS is relatively straightforward -- sometimes, we need to package new software, sometimes a package or NixOS service needs to be updated, or sometimes broken features need to be fixed or improved. This process is often challenging, but still relatively straightforward.

There are also quite a few major challenges in the Nix project, for which no trivial solutions exist. These are problem areas that cannot be solved with quick fixes and require fundamental redesigns. Solving these fundamental problems is quite challenging and typically requires me to dedicate a significant amount of my free time.

Unfortunately, because most of my work is done in my spare time and I cannot multi-task, I can only work on one major problem area at a time.

For example, I am quite happy with my last major development project: the Nix process management framework. It implements all the features that I want/need to consistently eat my own dogfood. It is IMHO a pretty decent solution for use cases where most conventional developers would normally use Docker/docker-compose.

Unfortunately, to reach all my objectives I had to pay a huge price -- I published the first implementation of the process management framework back in 2019, and all my major objectives were reached in the middle of 2021. As a consequence, I have spent nearly two years of my spare time only working on the implementation of this framework, without having the option to switch to something else. For the first six months, I remained motivated, but slowly I ran into motivational problems.

In this two-year time period, there were lots of problems appearing in other projects I used to be involved in. I could not get these projects fixed, because these projects also ran into fundamental problems requiring major redesigns/revisions. This resulted in a number of problems with members in the Nix community.

As a result, I got the feeling that I had lost control. Moreover, doing any Nix-related work also gave (and to some extent still gives) me a lot of negative energy.

Next year, I intend to return and I will look into addressing my issues. I am thinking about the following steps:

- Leaving the solution of some major problem areas to others. One such area is NPM package deployments with Nix. node2nix was probably a great tool in combination with older versions of NPM, but its design already reached the limits of what is possible years ago.

As a result, node2nix does not support the new features of NPM and does not solve the package scalability issues in Nixpkgs. It is also not possible to properly support these use cases by implementing "quick fixes". To cope with these major challenges and keep the solution maintainable, a new design is needed.

I have already explained my ideas on the Discourse mailing list and outlined what such a new design could look like. Fortunately, some good initiatives have already been started to address these challenges.

- Building prototypes and integrating the ideas into Nixpkgs rather than starting an independent project/tool that attracts a sub community.

I have implemented the Nix process management framework as a prototype with the idea to show how certain concepts work, rather than advertising the project as a new solution.

My goal is to write an RFC to make sure that these ideas get integrated into the upstream Nixpkgs, so that it can be maintained by the community and everybody can benefit from it.

The only thing I still need to do is write that RFC. This should probably be one of my top priorities next year.

- Moving certain things out of Nixpkgs. The Nixpkgs project is a huge project with several thousands of packages and services, making it quite a challenge to maintain and to implement fundamental changes.

One of the side effects of its scale is that the Nixpkgs issue tracker is as good as useless. There are thousands of open issues and it is impossible to properly track the status of individual aspects in the Nixpkgs repository.

Thanks to Nix flakes, which unfortunately is still an experimental feature, we should be able to move certain non-essential things out of Nixpkgs and conveniently deploy them from external repositories. I have some things that I could move out of the Nixpkgs repository when flakes have become a mainstream feature.

- Better communication about the context in which something is developed. When I was younger, I always used to advertise a new project as the next great thing that everybody should use -- these days, I am more conservative about the state of my projects and I typically try to warn people upfront that something is just a prototype and not yet ready for production use.

Blog posts

In my previous reflection blog posts, I always used to reflect on my overall top 10 of most popular blog posts. There are no serious changes compared to last year, so I will not elaborate on them. The fact that I have not been so active on my blog this year has probably contributed to that.

Concluding remarks

Next year, I will look into addressing my issues with Nix development, and I hope to return to my software deployment/Nix-related work!

The final thing I would like to say is:

HAPPY NEW YEAR!!!